tl;dr

Like Googling, LLM prompt engineering is a basic skill that will become indispensible to personal and professional projects as LLMs become more entrenched in our computing activities. I created an LLM frontend which uses Google Sheets to trigger and record prompt iterations. Systematically develop an intuition for prompting and track/share your progress without additional effort.

Why bother?

Large Language Model (LLM) ecosystems have recently shown incredible progression in their capabilities even within the past year. The core function of many human endeavors is about to change fundamentally as it becomes faster, cheaper, and more reliable to use LLM-tech to do things that once depended on many different unpredictable human factors.

An easy way to learn about the many possibilities of LLMs is through hands-on interaction and a comparative study of different models and paradigms. You can quickly discover all the the nuances and limitations simply by experimenting with:

- different parameter scales (ex: 7B params, 13B params, 70B params all behave differently)

- different vendors (ex: GPT-4 from OpenAI is state of the art but much more restrictive than other vendors)

- different prompting techniques (reply behavior is heavily influenced by small changes in the user input)

Limitations of typical LLM interactions

User facing LLM apps have mostly converged into a sort of chat cycle with the LLM. While this process works well for casual conversation, when you want predictable and repeatable behavior it is best to avoid long drawn out conversations and instead use specific (zero-shot / few-shot) prompt styles. These styles have the potential to be further developed into templates. Achieving this can be difficult, time-consuming, and costly.

But the biggest hurdle is the design of the default chat interaction, which forces a flow-of-thought style conversation. Knowing that LLMs are stateless, it is necessary to apply prompts as the first messages sent to the LLM in individual sessions.

Using Google Sheets

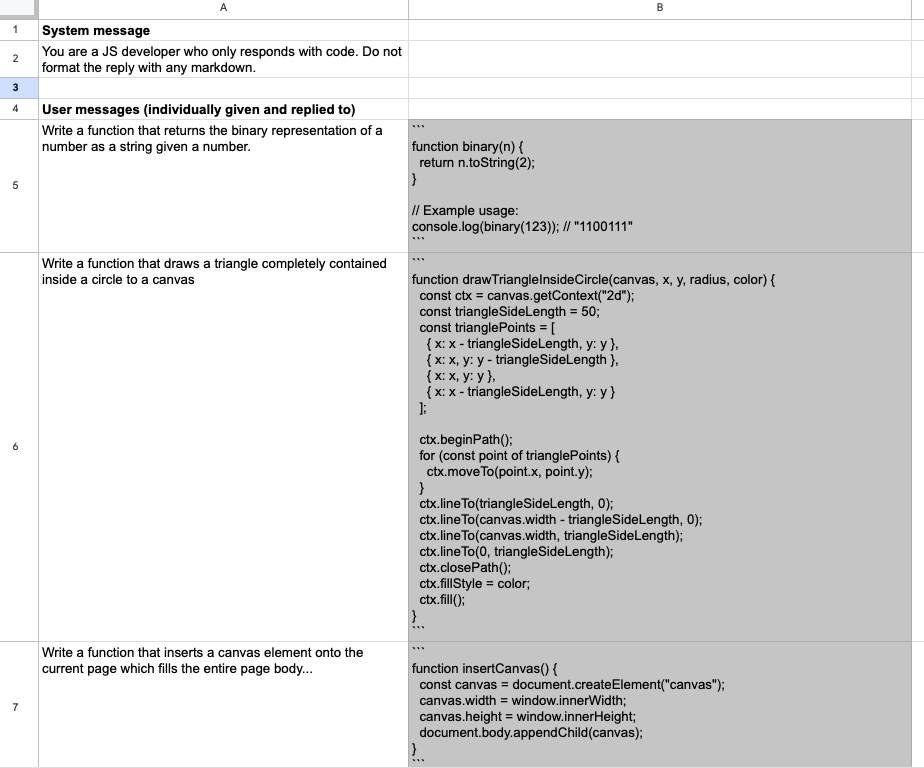

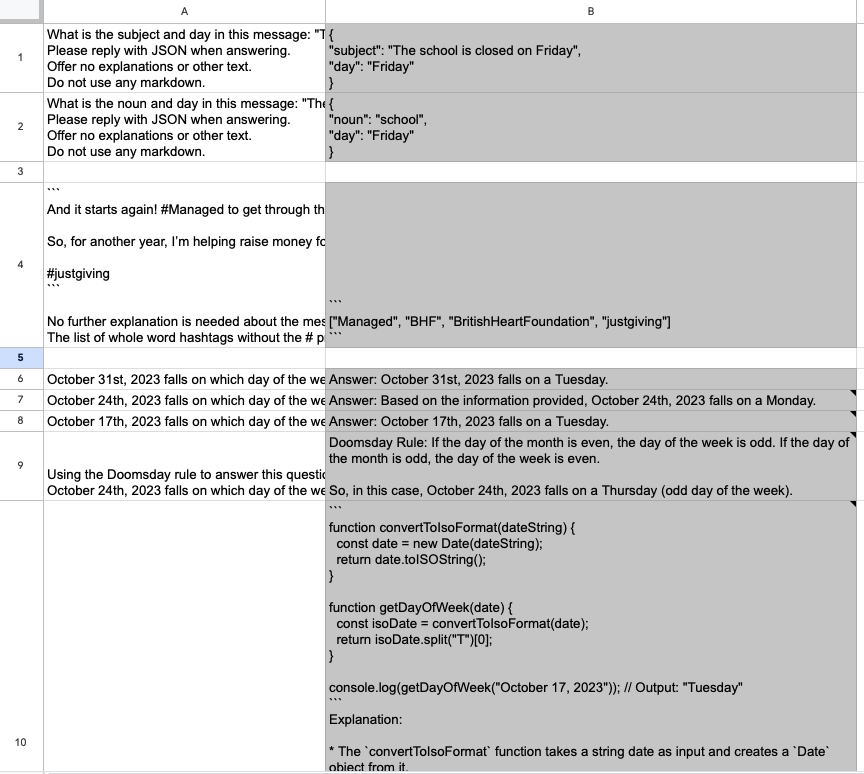

If you want to quickly compare how different inputs will affect the LLM response behavior, you would need a way to see the prompts and replies side by side. With Google Sheets being familiar to the majority of computer literate people, we can let it handle all the tabular data heavy lifting by making it the frontend. Sprinkle in some spreadsheet scripting and we can create our own fast-feedback-loop tool and then use it to record, compare, and improve our prompting.

There are existing tools that allow you to use LLMs within cells as a spreadsheet formula function: GPT-google-sheets, LLM for Sheets, etc. and I acknowledge them but we can do better.

The improvement is simple: forgo the formula step and offer the LLM reply automatically within the sheet.

Prerequisities

-

You should already have access to an LLM through an API.

There are many options available and you should choose one that best fits your use case. For instance, I’ve used the free (as in free beer)llama-2-chat-7b-int8model available through the Cloudflare Workers AI service. Using CF Workers AI allows me to create an API server with some basic authentication that can be accessed from anywhere on the Internet. But you could just as easily adapt this to use GPT-4 or GPT-3.5 Turbo. -

You should also have a blank Google Sheets document.

How it works

For faster-feedback, we want to be able to enter prompt text into a cell and then have the spreadsheet automatically use the text to feed into the LLM API. When it gets a reply back from the API, it should insert the reply into the cell directly to the right of it and apply some sort of styling to make it clear to the user that the text in this cell was a reply from the LLM.

In our example, the LLM API supports:

- Chat interactions

- Input is a list of system / user / assistant messages

- Output is the most probable next message according to the LLM

- Text completion interaction

- Input is a block of text

- Output is the most probable block of text directly following the input

This tool should be built to support both interaction styles. Let’s go!

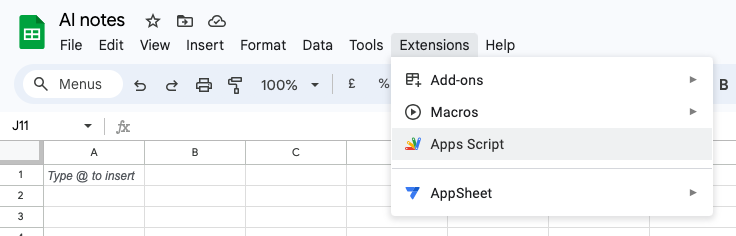

1. From your Google Sheets menu, open Extensions > Apps Script

2. Paste code into your editor and save it with (Ctrl / Cmd + S)

You will now have a function that can be triggered by a spreadsheet event. Adjust the API URL and authentication parameters for your use case accordingly.

const API_ORIGIN = 'https://your-api-here';

const API_AUTH_PAYLOAD = {

user : '',

pass : '',

};

function callAPI(endpoint, data) {

return UrlFetchApp.fetch(API_ORIGIN + endpoint, {

'muteHttpExceptions': true,

'method' : 'post',

'contentType': 'application/json',

'payload' : JSON.stringify({

...API_AUTH_PAYLOAD,

...data

})

})

.getContentText()

.trim();

}

function getTextCompletionReply(e) {

const currentCell = e.range.activateAsCurrentCell();

const value = currentCell.getValue();

if(value.length === 0) return;

currentCell.offset(0,1)

.setBackground('#ffffcc')

.setValue('...');

// Logger.log(value);

const answer = callAPI('/completion', {prompt: value});

Logger.log(answer);

currentCell.offset(0,1)

.setBackground('#cccccc')

.setWrap(true)

.setValue(answer);

}

function getChatReply(e) {

const currentCell = e.range.getCell(0,0);

const value = currentCell.getValue();

// Ignore headings

if(currentCell.getFontWeight() === 'bold') return;

if(value.length === 0) return;

const systemMessage = e.range.getSheet().getRange('A2:A2').getValue().trim();

currentCell.offset(0,1)

.setBackground('#ffffcc')

.setValue('...');

// Logger.log(value);

const answer = callAPI('/chat', {messages: [

{role: 'system', content: systemMessage},

{role: 'user', content: value}

]});

Logger.log(answer);

currentCell.offset(0,1)

.setBackground('#cccccc')

.setWrap(true)

.setValue(answer);

}

function replyWithLLM(e) {

const rangeSheet = range.getSheet();

switch(rangeSheet.getName()){

case 'Text completion testing (llama-2-chat-7b-q8)':

return getTextCompletionReply(e);

case 'Chat reply testing (llama-2-chat-7b-q8)':

return getChatReply(e);

default:

Logger.log('Event ignored, not applicable to this sheet');

}

}

4. Create a trigger for your code.

- In the left-hand sidebar, go into the

Triggerstab - Click on

Create a new trigger - Select the function to run as

replyWithLLM - Select the event type as

On edit - Leave all other options as default and click

Save

This trigger will fire whenever we change the value of a cell anywhere in our spreadsheet.

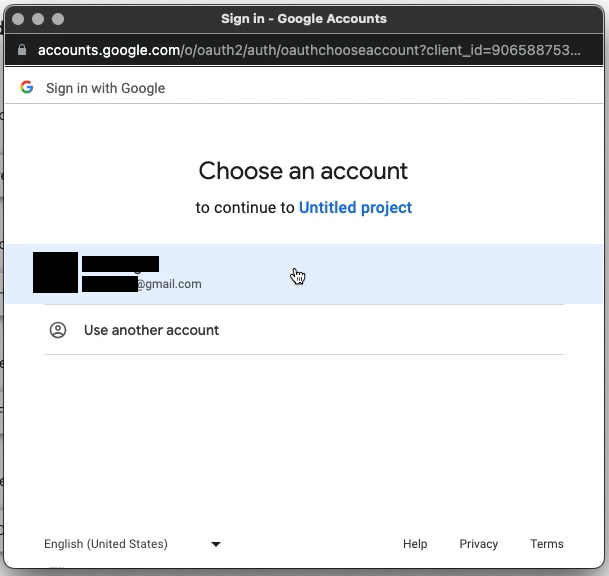

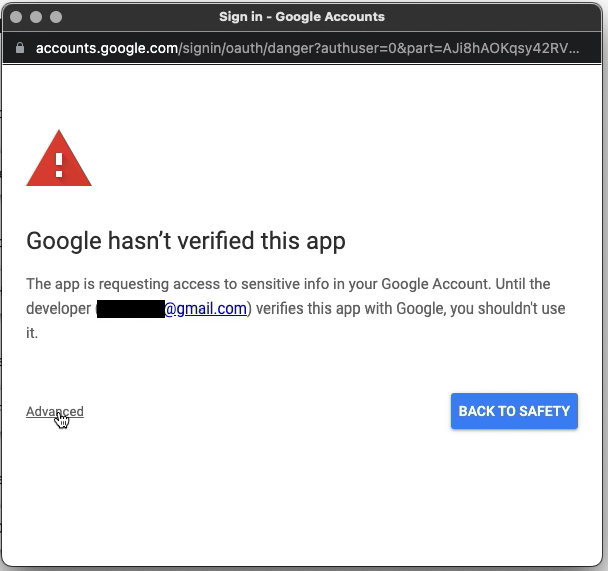

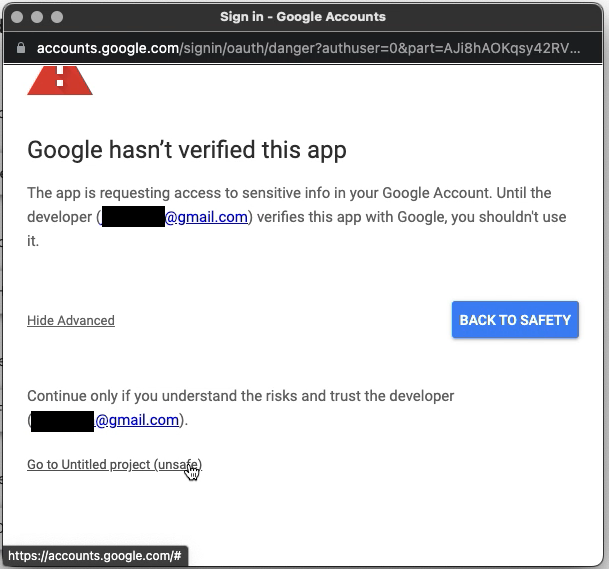

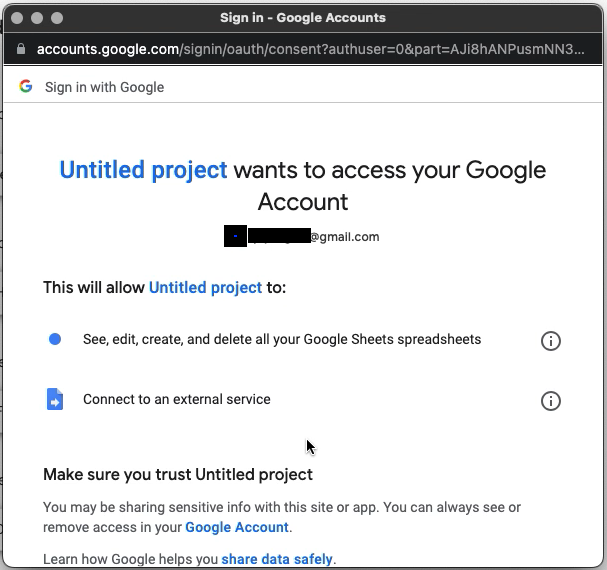

5. Allow your Apps Script project to access your Google Sheet

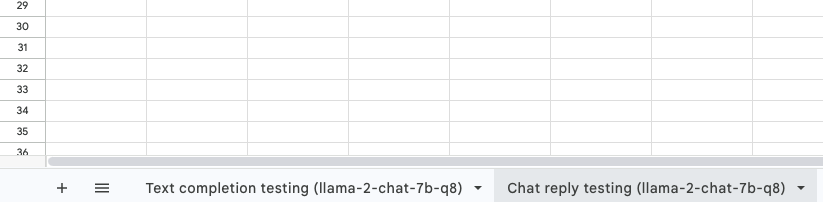

6. Name your sheets according to the script so they’re permitted to insert the LLM reply within the appropriate sheet

You can change the names to suit your own use, just make sure that the name within the Apps Script code and the name of the sheet matches!

...

function replyWithLLM(e) {

const rangeSheet = range.getSheet();

switch(rangeSheet.getName()){

case 'Text completion testing (llama-2-chat-7b-q8)': // <<<<<<<<<<<<<

return getTextCompletionReply(e);

case 'Chat reply testing (llama-2-chat-7b-q8)': // <<<<<<<<<<<<<

return getChatReply(e);

default:

Logger.log('Event ignored, not applicable to this sheet');

}

}

Your prompt honing sheet is now ready to use!

Here you can see it replying inline within the sheet so we don’t break our prompt refinement flow: